Accurate underwriting requires precise estimation and modeling of the insured’s risk. Traditionally, human experts achieve accuracy by analyzing data like vehicle reports and fleet stats. While most insurance companies use a handful of data points, Nirvana improves underwriting by leveraging IoT or intelligent sensor data. Specifically, we use telematics data like driver behavior, vehicle location, vehicle activity, and engine diagnostics, which we continuously receive and process from IoT devices attached to the insured vehicles. Analytics of this data gives us previously unexplored insights into the risk and behavior of our client’s fleets. One of our goals is to estimate risk as quickly as possible to minimize the turnaround time we take from the moment we receive an application to generating a quote.

The challenge, of course, lies in processing very large data volumes with efficiency and accuracy. Traditional MLOps practices have a handful of models, trained once and then used across all customers. At Nirvana, we take the unique approach of running our ML workflows on a per-customer basis. We train and deploy a new model on each customer’s data for the best fit. We then use the per-customer model to generate insights such as vehicle-level and driver-level risk profiles to segment the risk. Once trained, our models output insights continuously per week or month to generate trends.

However, training models per customer create unique challenges for our engineering team. For example, we have to think about how to:

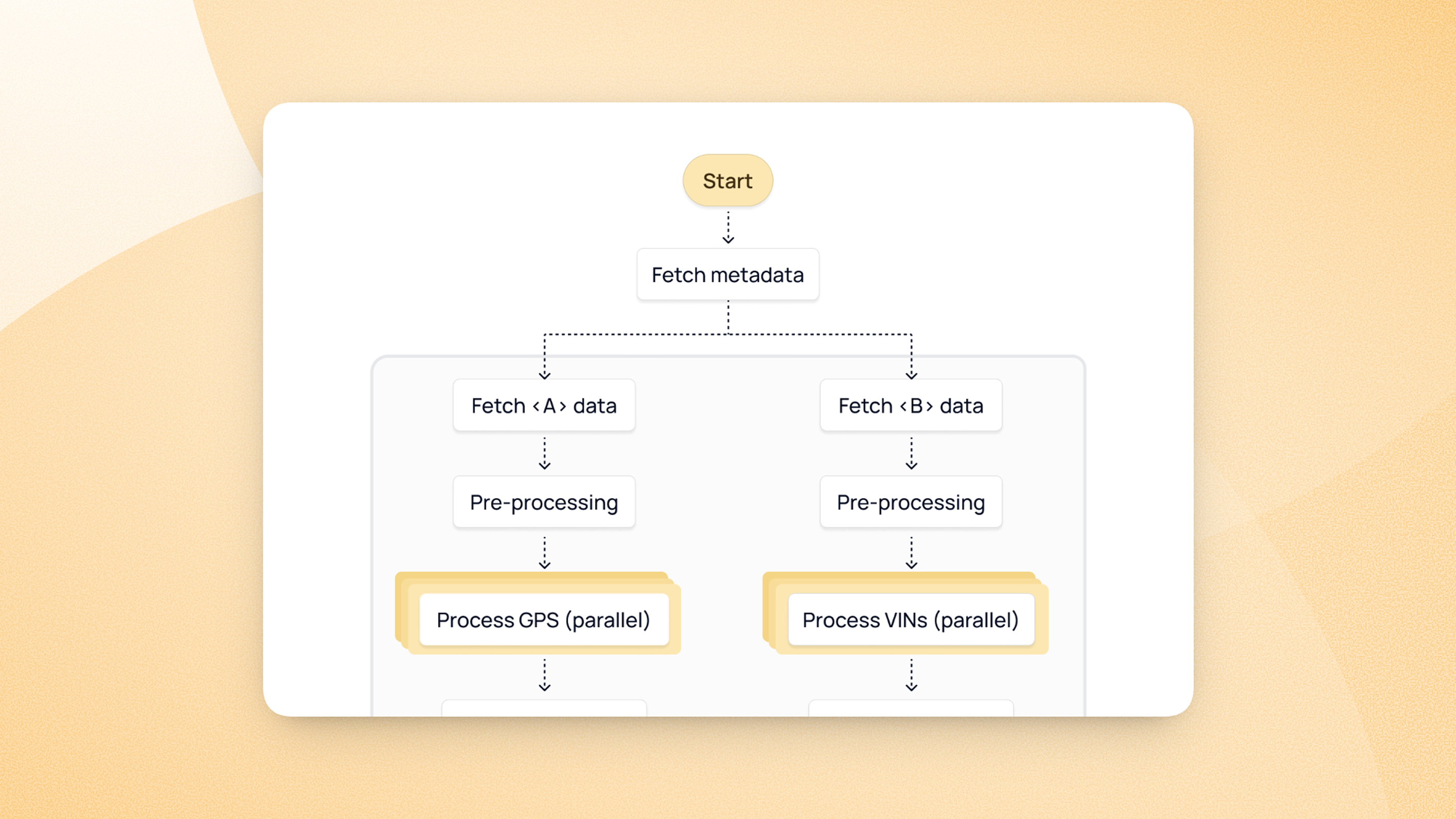

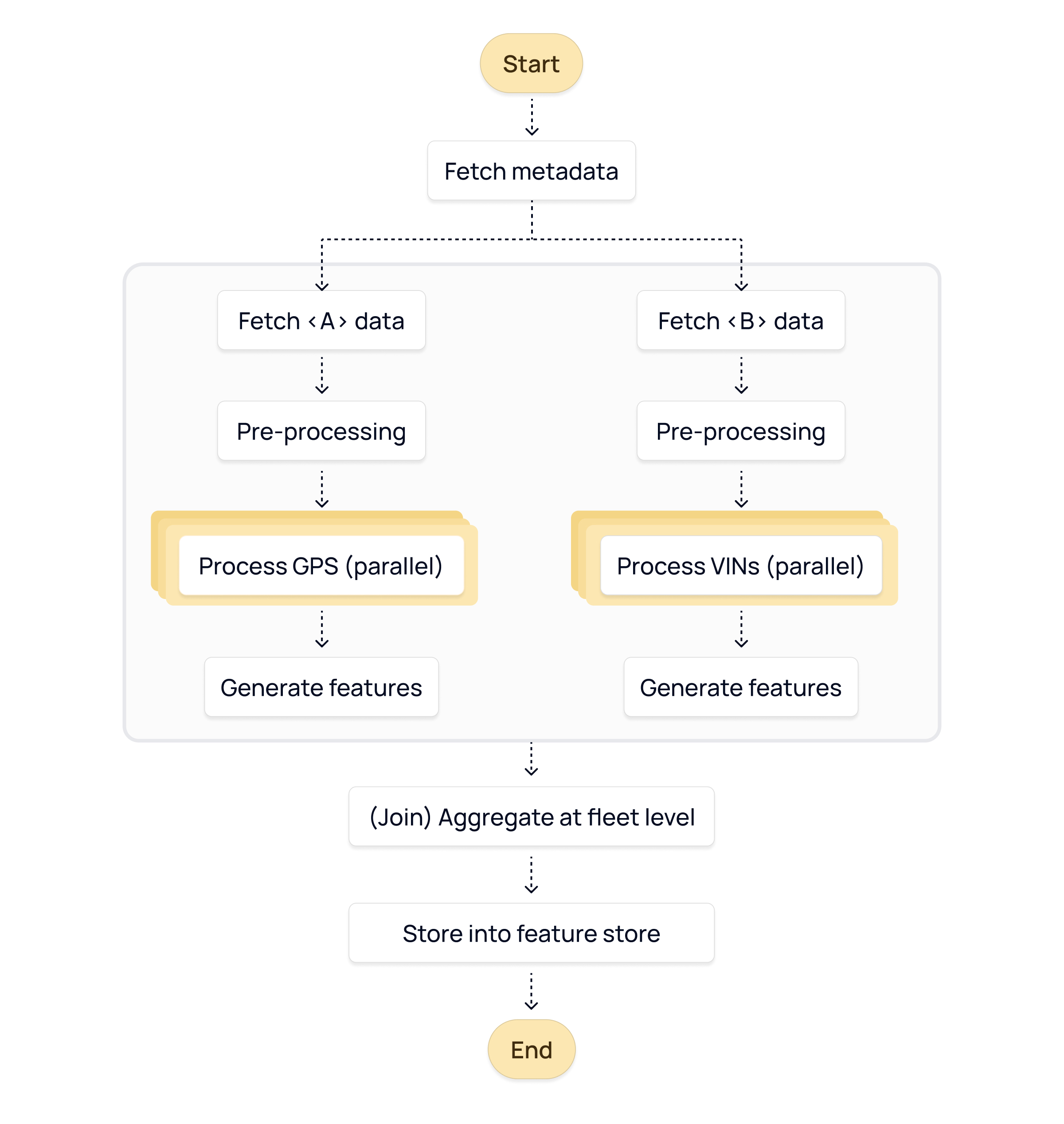

- Run some data pipeline steps concurrently and manage data flow between different steps.

- Version both the pipelines themselves and the artifacts produced by them.

- Automate the entire MLOps stack while managing monitoring and configuration drift challenges.

This article dives deeper into how we solve the first challenge of MLOps — writing data pipelines that scale and infrastructure to scale them.

Adopting the dataflow paradigm

We adopted the dataflow paradigm that breaks down a data pipeline into logical steps and expresses data or control dependencies among them as a graph. The various steps and data dependencies create a directed acyclic graph(DAG). An orchestrator executes the graph, optimizing run time by automatically deducing which steps can run in parallel.

Running all the steps in a single machine may be suitable for local or development testing but unsuitable for large-scale production. Consider the problem of running ten steps in parallel, each of which requires a (4vCPU, 32GB) machine. Therefore, an orchestrator is associated with a compute cluster of several machines capable of running the steps.

So, to run the steps, the orchestrator places them into one of the available machines in the compute cluster. However, even for a single pipeline, our workloads are not uniform and can have frequent peaks of high resource requirements. Therefore, we require the ability to provision and deprovision compute responsively according to pipeline requirements.

What we were looking for in our solution

Our criteria while researching potential solutions were as follows.

Application framework

We required an application framework / SDK for data scientists to author their workflows and pipelines. We wanted the framework to be simple and infra-agnostic. Here simple means concerning usage and not expressiveness. Various control flow constructs such as branching, dynamic fan-out, and conditionals are desirable. We wanted a framework that could provide a self-serve and holistic experience for our data scientists, leading to faster development iterations.

Orchestrator

The framework would output a DAG, which a production-grade orchestrator must then interpret and run. All software and hardware can crash. But faults in the orchestrator are considered critical. Therefore we needed a highly-available orchestrator.

On-demand infrastructure

Finally, the orchestrator needs a compute cluster to run on. We were looking for scalability of computing clusters and on-demand pricing. We were willing to tolerate some degree of faults through retries.

Choosing the tech stack

We explored multiple solutions. All major cloud vendors offer a production-grade orchestrator and an accompanying SDK. However, cloud-native experience limits local development and testing, ultimately reducing development velocity. We needed an infra-agnostic framework that runs on both local and cloud. We also wanted support for storing intra-pipeline artifacts, that is, artifacts produced by one step exclusively for some other step, as an intermediate output. Most cloud-native frameworks defer this responsibility to the end developer by requiring them to fiddle around with cloud-storage solutions manually.

Metaflow as the application framework

After some testing, we closed in on the Metaflow ML framework. Metaflow’s philosophy of optimizing for the development experience of data scientists aligned closely with our own goals. In addition, the framework:

- Is infra-agnostic, so the same code runs as-is on both local and cloud

- Provides an excellent interface for passing data artifacts between steps

- Has excellent debugging capabilities

Our data scientists can open a notebook and investigate any failed production or local run. They can look into artifacts produced by steps, their logs, and run time and resume the flow run from a single step to reproduce an issue. We optimized our infrastructure to leverage these features further.

However, Metaflow does have limitations in managing multi-version deployments and end-to-end automation, including deployment lifecycle, triggering, and monitoring. To fill these gaps, we have developed and continue refining in-house infrastructure layers over Metaflow.

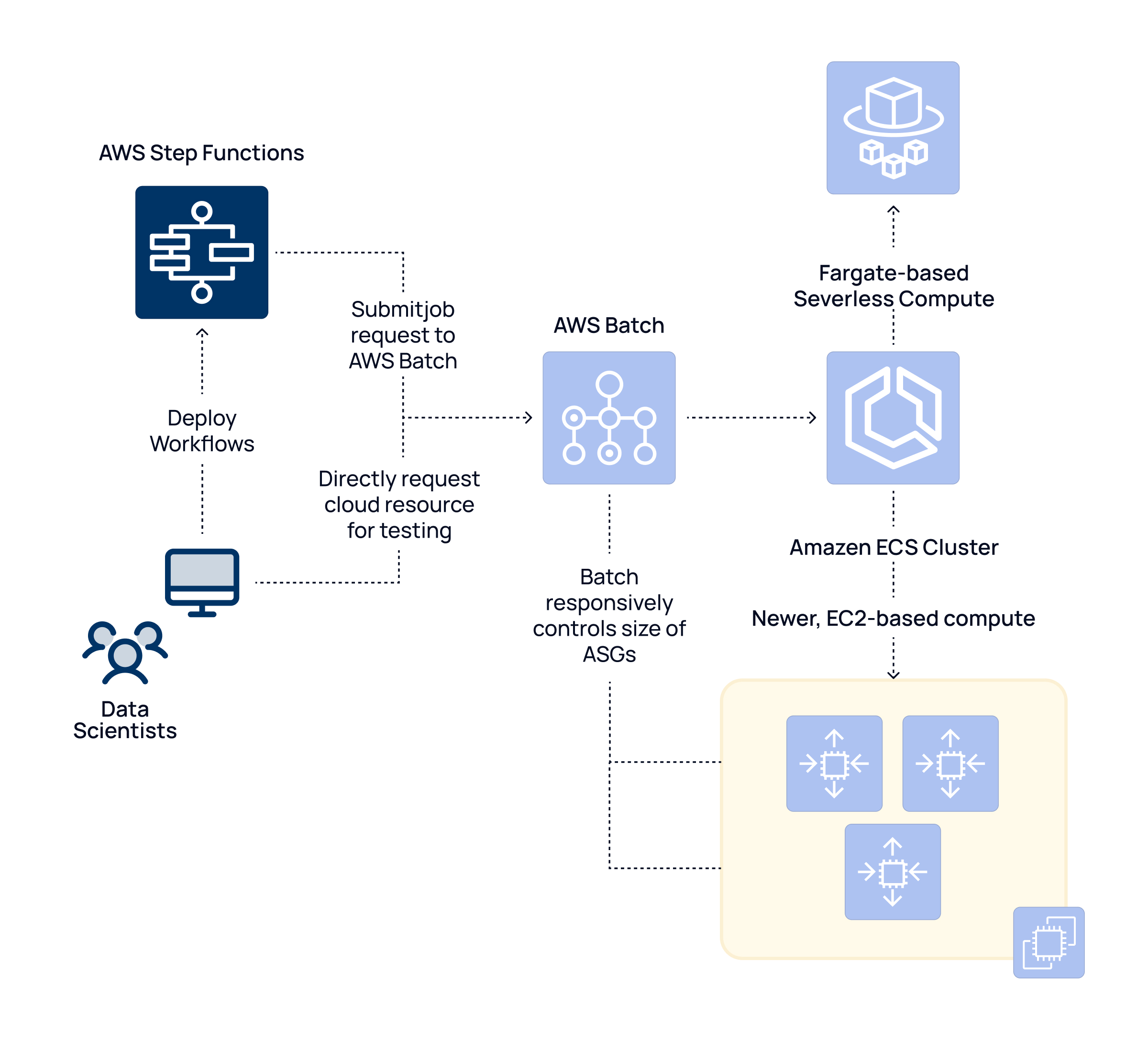

AWS Step Functions as the Orchestrator

For the orchestrator, the decision path was much more straightforward. High availability requirements immediately prompted us to explore a cloud-native fully managed and/or serverless orchestrator. As most of our cloud infrastructure is in AWS, we decided to use AWS Step Functions, which ticks all the boxes. Additionally, Metaflow has native support for compiling the flow spec into a Step Functions state machine spec, so we did not have to do anything extra.

Though the most critical, the orchestrator is also the most inexpensive component in the stack. Despite running over multiple thousands of monthly pipelines for our customers, we are paying <1% of our total MLOps infra cost on Step Functions. So far, we have not faced any scalability issues or downtimes due to Step Functions, so the high availability requirement is well fulfilled.

AWS Batch over ECS Fargate as the compute cluster

Our choice of the Metaflow framework forced us to choose AWS Batch over Amazon Elastic Container Sevice(ECS) as our compute cluster. Though we had little choice in determining the abstraction layer, we have significant control over how AWS Batch provisions resources.

Initially, we gave in to the serverless vision by running AWS Batch over ECS Fargate. AWS was fully managing everything from hardware to the control plane. We were only paying for the computing hours we used.

Architecture overview

To put everything in perspective, our architecture worked as follows:

- Our data scientists wrote dataflows in Python using Metaflow SDK.

- Metaflow compiled the dataflow into a state machine spec suitable to run through AWS Step Functions.

- AWS Step Functions sent job scheduling requests to AWS Batch.

- AWS Batch, as a thin abstraction layer, defers task placement to Amazon ECS.

By running our ECS cluster over Fargate, our tasks were placed on a fully managed hardware platform we did not have to manage.

Challenges with Fargate-based compute

In a couple of months, we uncovered some difficulties with the compute cluster management. Firstly, Fargate costs more per vCPU per hour than a comparable EC2 instance. Secondly, as of writing, Fargate does not support GPUs, so steps that required GPUs could not be run. AWS is also silent on details on network bandwidth and disk bandwidth available to tasks running on Fargate. These details become important during the optimization phases.

But most importantly, we faced a unique challenge with Fargate related to the time it takes to pull the container image, which ultimately runs the Python code data scientists wrote. Due to a dense network of package dependencies, our Python container images are around 2GBs on average. Empirically it takes anywhere between 1–2 minutes for a Fargate task to start running due to the image pull time. The start delay was becoming a challenge for our MLOps workflows which are not compute-heavy but deal with things like writing to a feature store or doing lightweight processing.

Switching from Fargate to EC2

We decided to migrate from Fargate to Amazon EC2 for our MLOps workflows despite the expense of increased management.

The migration was challenging and required us to study AWS Batch internals and how Batch does the scheduling and autoscaling of EC2 instances responsively. Unfortunately, public documentation on Batch internals is scarce, so it was a game of trial and error.

After some experimenting, we solved our main challenge and decreased our image pull time by leveraging disk caching of images and enabling GPU. However, we occasionally see infrastructural errors on AWS, primarily related to networking issues. We have retries on all our workflow steps to mitigate these.

We aim to keep the instance utilization over 70% to beat Fargate regarding raw dollar cost.

For the future

As exciting as MLOps infrastructure work is, our unique requirement of running MLOps pipelines per customer also imposes challenges requiring specialized solutions. Our engineering team continuously tries to improve the infrastructure by optimizing for our use cases or adding new features keeping in mind our core tenets: automation, speed & scalability. In future blog posts, we will talk about some of the solutions we’ve built — like our in-house caching layer to extract better performance while managing cost, a programmatic data contract layer between DS/ML and engineering teams, and much more.